Building a machine-learning pipeline is like diving into an ocean of possibilities. The process promises insights, innovation, and transformation, but, as with any voyage through rough water, there are challenges along the way. In this blog post, we will look at the most common hurdles in constructing a machine-learning pipeline and at practical strategies for overcoming them. So buckle up as we dive in!

- The Challenges in Building a Machine Learning Pipeline
  - Data Collection and Preparation
  - Feature Selection and Engineering
  - Model Selection and Tuning
  - Deployment and Monitoring
- Strategies to Overcome These Challenges
  - Collaborating with Domain Experts
  - Following Best Practices and Standards
  - Continuous Testing and Validation
  - Utilizing Automation Tools
- Real-life Examples of Overcoming Pipeline Challenges
- Conclusion

The Challenges in Building a Machine Learning Pipeline

Building a machine learning pipeline comes with challenges that can test even the most seasoned data scientist. They cluster in four areas: data collection and preparation, feature selection and engineering, model selection and tuning, and deployment and monitoring. The first is often the most laborious, because ensuring data quality, handling missing values, and dealing with unstructured data are all time-consuming tasks.

Data Collection and Preparation

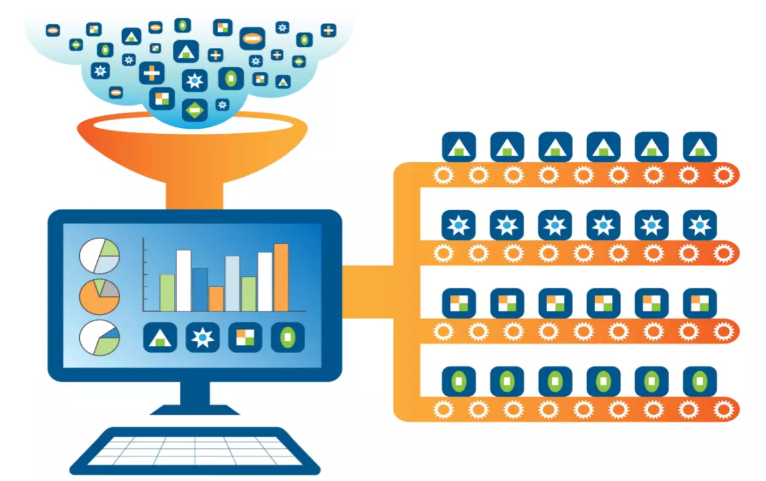

Data collection and preparation are the foundational steps of a successful machine learning pipeline. They involve gathering raw data from various sources, cleaning it to remove inconsistencies and missing values, and transforming it into a format suitable for analysis.

A common challenge in this phase is the sheer volume of data, much of which may be messy or unstructured. This can introduce duplicated records, inaccuracies, or biases, any of which can degrade the model's performance.

Moreover, ensuring data quality and relevance is crucial for accurate predictions. Data scientists often spend a significant amount of time on tasks like normalization, encoding categorical variables, and handling outliers to make sure the input data is reliable for training models.

Techniques such as data profiling and exploratory data analysis (EDA), together with tools like pandas in Python or SQL queries against a database, help practitioners streamline these steps.
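As a rough illustration of these preparation steps, the sketch below uses pandas to drop duplicate rows, fill missing values, clip outliers, and one-hot encode categorical columns. The function name `clean`, the median imputation, and the 1st/99th-percentile clipping rule are assumptions chosen for the example; the right choices depend on your data.

```python
import numpy as np
import pandas as pd


def clean(df: pd.DataFrame) -> pd.DataFrame:
    """Basic cleaning: deduplicate, impute, clip outliers, one-hot encode."""
    df = df.drop_duplicates().copy()
    numeric = df.select_dtypes(include="number").columns
    categorical = df.select_dtypes(exclude="number").columns

    # Fill numeric gaps with each column's median, which is robust to outliers.
    df[numeric] = df[numeric].fillna(df[numeric].median())

    # Clip numeric values to their 1st-99th percentile range (simple winsorizing).
    low = df[numeric].quantile(0.01)
    high = df[numeric].quantile(0.99)
    df[numeric] = df[numeric].clip(lower=low, upper=high, axis=1)

    # Give missing categories an explicit label, then one-hot encode them.
    df[categorical] = df[categorical].fillna("missing")
    return pd.get_dummies(df, columns=list(categorical))


# Hypothetical raw data: one duplicate row, one missing age, one extreme age, one missing city.
raw = pd.DataFrame({
    "age": [25, 25, np.nan, 40, 1000],
    "city": ["Paris", "Paris", "Lyon", None, "Lyon"],
})
out = clean(raw)
print(out)
```

In practice you would run profiling or EDA first and let what you find drive these choices, rather than applying fixed rules blindly.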

Feature Selection and Engineering

Feature selection and engineering is another key challenge. This stage involves deciding which data attributes are most relevant for training the model and how to transform them effectively.

Feature selection requires weighing both quantitative and qualitative factors. A good starting point is to favor features that are strongly associated with the target variable while avoiding multicollinearity (strong correlation among the predictors themselves), which can make the coefficients of linear models unstable and hard to interpret.

Feature engineering, on the other hand, involves creating new features or transforming existing ones to enhance predictive performance. Techniques like normalization, encoding categorical variables, and creating interaction terms can all play a vital role in improving model accuracy.

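Algorithms such as recursive feature elimination (RFE) and principal component analysis (PCA) can speed this up, but they work differently. RFE repeatedly trains a model and discards the weakest features, so it keeps a subset of the original attributes. PCA instead replaces the attributes with a smaller set of uncorrelated components, which reduces dimensionality but makes the model inputs harder to interpret.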
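To make the difference concrete, here is a minimal scikit-learn sketch on synthetic data (generated with `make_classification`, so the dataset itself is an assumption). RFE returns a mask over the original columns, while PCA returns new components.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.feature_selection import RFE
from sklearn.linear_model import LogisticRegression

# Synthetic data: 10 features, of which only 3 carry signal.
X, y = make_classification(
    n_samples=500, n_features=10, n_informative=3, n_redundant=0, random_state=0
)

# RFE: fit the model, drop the weakest feature, repeat until 3 original features remain.
rfe = RFE(estimator=LogisticRegression(max_iter=1000), n_features_to_select=3)
rfe.fit(X, y)
selected = [i for i, keep in enumerate(rfe.support_) if keep]
print("RFE kept original feature indices:", selected)

# PCA: project onto 3 new components, each a weighted mix of all 10 features.
pca = PCA(n_components=3)
X_pca = pca.fit_transform(X)
print("Variance explained per component:", pca.explained_variance_ratio_.round(3))
```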

Model Selection and Tuning

Model selection and tuning is the next hurdle. With so many algorithms available, choosing the most suitable one can be daunting. Each algorithm has its own strengths and weaknesses, so it is important to understand their nuances.

Tuning means searching for the hyperparameter values that give the best performance. This involves balancing bias and variance so that the model neither underfits nor overfits the data. Grid search, random search, and Bayesian optimization are commonly used techniques for this purpose.
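A minimal sketch of the first two search strategies with scikit-learn's `GridSearchCV` and `RandomizedSearchCV`, tuning a support vector classifier on synthetic data. The model, the parameter ranges, and the dataset are illustrative assumptions. Bayesian optimization is not built into scikit-learn and typically comes from a separate library such as Optuna or scikit-optimize, so it is not shown.

```python
from scipy.stats import loguniform
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, RandomizedSearchCV
from sklearn.svm import SVC

X, y = make_classification(n_samples=300, n_features=8, random_state=0)

# Grid search: evaluate every combination of the listed values with 5-fold CV (9 candidates).
grid = GridSearchCV(
    SVC(),
    param_grid={"C": [0.1, 1, 10], "gamma": ["scale", 0.01, 0.1]},
    cv=5,
)
grid.fit(X, y)
print("Grid best:", grid.best_params_, round(grid.best_score_, 3))

# Random search: sample a fixed budget of 10 settings from log-uniform ranges.
rand = RandomizedSearchCV(
    SVC(),
    param_distributions={"C": loguniform(1e-2, 1e2), "gamma": loguniform(1e-3, 1e0)},
    n_iter=10,
    cv=5,
    random_state=0,
)
rand.fit(X, y)
print("Random best:", rand.best_params_, round(rand.best_score_, 3))
```

Grid search cost grows multiplicatively with each added parameter, whereas random search lets you fix the budget up front, which is why it is often preferred for larger search spaces.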

Cross-validation plays a vital role in evaluating model performance: the model is trained and scored on several different splits of the data, which shows how well it is likely to generalize to unseen data. Ensemble methods such as bagging and boosting can further improve predictive accuracy by combining multiple models.
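The sketch below uses `cross_val_score` to compare a single decision tree with a bagging ensemble and a boosting ensemble on the same synthetic data. The choice of models and settings is an assumption for illustration; whether an ensemble wins depends on the dataset.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import BaggingClassifier, GradientBoostingClassifier
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=400, n_features=10, random_state=0)

models = {
    "single tree": DecisionTreeClassifier(random_state=0),
    # Bagging: many trees trained on bootstrap samples, predictions combined by voting.
    "bagging": BaggingClassifier(DecisionTreeClassifier(), n_estimators=50, random_state=0),
    # Boosting: trees added one at a time, each correcting the previous ensemble's errors.
    "boosting": GradientBoostingClassifier(random_state=0),
}

scores = {}
for name, model in models.items():
    # 5-fold CV: train on four folds, score on the held-out fold, rotate through all five.
    fold_scores = cross_val_score(model, X, y, cv=5, scoring="accuracy")
    scores[name] = fold_scores.mean()
    print(f"{name}: {fold_scores.mean():.3f} ± {fold_scores.std():.3f}")
```

Reporting the spread across folds alongside the mean helps show whether a difference between models is larger than the noise from the particular split.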

Once a model is in production, regularly monitoring its performance is essential for detecting drift or degradation over time. Automated retraining schedules can help keep performance high as new data arrives.

Deployment and Monitoring

Once your machine learning model is trained, the next critical step is deployment and monitoring. Deploying a model means making it available to real-world applications, which can be challenging because of compatibility issues or infrastructure constraints.

Monitoring the deployed model is essential to keep its performance high over time. This includes tracking key metrics, detecting drift in the distribution of incoming data, and addressing issues promptly.
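One common way to check a single numeric feature for drift is a two-sample Kolmogorov-Smirnov test comparing training-time values with recent production values. This is a minimal sketch assuming SciPy; the function name `detect_drift` and the significance level `alpha=0.01` are hypothetical choices, and with very large samples even tiny, harmless shifts can become statistically significant.

```python
import numpy as np
from scipy.stats import ks_2samp


def detect_drift(reference, live, alpha=0.01):
    """Flag drift when the KS test rejects 'both samples share one distribution'."""
    result = ks_2samp(reference, live)
    return bool(result.pvalue < alpha), float(result.statistic), float(result.pvalue)


rng = np.random.default_rng(0)
train_feature = rng.normal(loc=0.0, scale=1.0, size=2000)  # values seen at training time
same = rng.normal(loc=0.0, scale=1.0, size=2000)           # production data, unchanged
shifted = rng.normal(loc=0.5, scale=1.0, size=2000)        # production data, mean has moved

print("unchanged:", detect_drift(train_feature, same))
print("shifted:  ", detect_drift(train_feature, shifted))
```

The test looks at one feature at a time; for many features you would run it per column and correct for multiple comparisons, or use a dedicated drift metric.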

Implementing robust monitoring mechanisms can help detect anomalies early on and prevent unexpected failures that could impact business operations. It’s crucial to establish clear protocols for ongoing monitoring and have systems in place to alert teams when intervention is needed.
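An alerting rule can be as simple as a rolling average with a floor. The `MetricMonitor` class below is a hypothetical sketch, assuming each observation is a per-prediction score (for example 1 for a correct prediction and 0 for a wrong one, once ground-truth labels arrive); wiring the `True` result to a pager or chat channel is left out.

```python
from collections import deque


class MetricMonitor:
    """Track a rolling window of a live metric and flag when its mean drops below a floor."""

    def __init__(self, floor: float, window: int = 100):
        self.floor = floor
        self.values = deque(maxlen=window)  # oldest values fall off automatically

    def record(self, value: float) -> bool:
        """Add one observation; return True if the team should be alerted."""
        self.values.append(value)
        if len(self.values) < self.values.maxlen:
            return False  # not enough data yet to judge
        return sum(self.values) / len(self.values) < self.floor


monitor = MetricMonitor(floor=0.8, window=5)
alerts = [monitor.record(v) for v in [1, 1, 1, 1, 1, 0, 0]]
print(alerts)
```

Waiting for a full window avoids alerting on the first few noisy observations; the window size and floor are the knobs a team would agree on as part of its monitoring protocol.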

Strategies to Overcome These Challenges

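Four strategies help address these challenges: collaborating with domain experts, following best practices and standards, continuous testing and validation, and utilizing automation tools. Automation, for example, can streamline the pipeline by handling repetitive tasks like data preprocessing, model training, and deployment. This saves time and reduces the chance of human error, leading to more efficient workflows.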

Collaborating with Domain Experts

Collaborating with domain experts is crucial to building a successful machine learning pipeline. Domain experts bring valuable insight into the data and the context behind it, so working closely with them helps data scientists understand the nuances of the problem at hand and build models on a solid foundation of domain knowledge.

Domain experts can guide feature selection and engineering, ensuring that relevant variables are included in the model. Their input helps to improve the accuracy and relevance of predictions. Additionally, collaborating with domain experts can help to identify potential biases in the data that might otherwise go unnoticed.

Following Best Practices and Standards

Adhering to best practices and industry standards is crucial for building a robust machine learning pipeline. Established guidelines keep the stages of the pipeline consistent, help your models meet quality benchmarks, and make your results reliable and reproducible.

One key aspect of this is maintaining clear documentation throughout the pipeline process. Documenting each step taken, from data collection to deployment, helps in tracking changes and understanding decisions made along the way.
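One lightweight way to document a training run is to save a small manifest alongside the model. The sketch below records a timestamp, library versions, a hash of the training data, and the parameters and metrics used. The function name `write_run_manifest`, its fields, and the example values are assumptions; dedicated experiment trackers such as MLflow cover the same ground more thoroughly.

```python
import hashlib
import json
import os
import platform
import tempfile
from datetime import datetime, timezone

import sklearn


def write_run_manifest(path, data_bytes, params, metrics):
    """Record what went into a training run so it can be traced and reproduced later."""
    manifest = {
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
        "python_version": platform.python_version(),
        "sklearn_version": sklearn.__version__,
        # Hashing the raw training data reveals if the "same" dataset silently changed.
        "data_sha256": hashlib.sha256(data_bytes).hexdigest(),
        "params": params,
        "metrics": metrics,
    }
    with open(path, "w", encoding="utf-8") as f:
        json.dump(manifest, f, indent=2, sort_keys=True)
    return manifest


# Example usage with placeholder data, parameters, and metrics.
out_path = os.path.join(tempfile.mkdtemp(), "run_manifest.json")
data = b"age,city\n25,Paris\n40,Lyon\n"
manifest = write_run_manifest(out_path, data, {"C": 1.0}, {"accuracy": 0.91})
```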

Another essential practice is conducting thorough testing and validation at every stage. This involves assessing model performance using diverse datasets, ensuring robustness across different scenarios.

Continuous Testing and Validation

Continuous testing and validation are crucial steps in the machine learning pipeline. Regularly evaluating models against new data and scenarios throughout development surfaces issues early, confirms that predictions remain accurate over time, and reveals any degradation in performance or in the model’s ability to generalize. It also lets you iterate quickly and improve the models’ overall effectiveness.

Implementing automated tests can streamline this process, allowing for rapid feedback on model performance. By setting up a robust validation framework, you can catch errors early and make necessary adjustments before deployment.
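Automated tests for a model can look much like ordinary unit tests. This pytest-style sketch assumes a hypothetical `train_model` function standing in for your real training step, and checks that the model clearly beats a majority-class baseline and produces well-formed predictions. The data is synthetic and the 0.1 margin is an arbitrary example threshold.

```python
from sklearn.datasets import make_classification
from sklearn.dummy import DummyClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split


def train_model(X, y):
    """Hypothetical training step standing in for the real pipeline."""
    return LogisticRegression(max_iter=1000).fit(X, y)


def test_model_beats_baseline():
    X, y = make_classification(
        n_samples=500, n_features=10, n_informative=5, n_redundant=0,
        n_clusters_per_class=1, random_state=0,
    )
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.25, random_state=0, stratify=y
    )
    model = train_model(X_train, y_train)
    baseline = DummyClassifier(strategy="most_frequent").fit(X_train, y_train)

    # Fail if the model is not clearly better than always predicting the majority class.
    assert model.score(X_test, y_test) > baseline.score(X_test, y_test) + 0.1

    # Fail if predictions have the wrong shape or contain labels never seen in training.
    preds = model.predict(X_test)
    assert preds.shape == y_test.shape
    assert set(preds) <= set(y_train)
```

Running such tests in continuous integration means a change to data handling or training code that quietly breaks the model fails the build instead of reaching production.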

Regularly monitoring key metrics such as accuracy, precision, recall, and F1 score enables you to track model performance effectively. This ongoing evaluation ensures that your machine learning system continues to meet the desired objectives and remains reliable in real-world scenarios.
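scikit-learn's `sklearn.metrics` module computes all four metrics directly. In this small binary example (the labels are made up for illustration, and the helper name `headline_metrics` is hypothetical) there are 2 true positives, 1 false positive, 2 false negatives, and 3 true negatives.

```python
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score


def headline_metrics(y_true, y_pred):
    """Return four common metrics for a binary classifier (positive label = 1)."""
    return {
        "accuracy": accuracy_score(y_true, y_pred),    # share of all predictions that are correct
        "precision": precision_score(y_true, y_pred),  # TP / (TP + FP)
        "recall": recall_score(y_true, y_pred),        # TP / (TP + FN)
        "f1": f1_score(y_true, y_pred),                # harmonic mean of precision and recall
    }


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 0, 0, 1, 1, 0]
metrics = headline_metrics(y_true, y_pred)
print(metrics)
```

Here accuracy is 5/8 = 0.625, precision is 2/3 ≈ 0.667, recall is 2/4 = 0.5, and F1 = 2·TP / (2·TP + FP + FN) = 4/7 ≈ 0.571. The gap between precision and recall is exactly the kind of detail accuracy alone hides.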

Utilizing Automation Tools

Imagine a world where mundane tasks in building a machine learning pipeline can be automated. Sounds like a dream, right? Well, with the advancements in technology, utilizing automation tools has become increasingly crucial in streamlining the process.

Automation tools can help simplify data collection and preparation by automatically fetching and cleaning datasets, freeing data scientists to focus on more complex tasks. They can also assist with feature selection and engineering by identifying relevant features with far less manual effort.
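A lightweight form of this automation is scikit-learn's `Pipeline`, which chains imputation, scaling, feature selection, and the model so they run as one unit. The sketch below uses synthetic data with artificially removed values; the step choices (median imputation, `SelectKBest` with `k=5`, logistic regression) are assumptions for illustration, not a recommendation.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = make_classification(n_samples=300, n_features=20, n_informative=5, random_state=0)
# Blank out roughly 5% of values to simulate missing data.
rng = np.random.default_rng(0)
X[rng.random(X.shape) < 0.05] = np.nan

pipeline = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
    ("select", SelectKBest(score_func=f_classif, k=5)),  # keep the 5 highest-scoring features
    ("model", LogisticRegression(max_iter=1000)),
])

# Each CV fold refits every step on that fold's training data only,
# so statistics from the held-out fold never leak into preprocessing.
scores = cross_val_score(pipeline, X, y, cv=5)
pipeline.fit(X, y)
kept = np.flatnonzero(pipeline.named_steps["select"].get_support())
print("CV accuracy:", scores.mean().round(3))
print("Selected feature indices:", kept)
```

Bundling the steps this way removes a whole class of manual mistakes, such as fitting the scaler on the full dataset before splitting it.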

Real-life Examples of Overcoming Pipeline Challenges

One real-life example of overcoming challenges in building a machine learning pipeline is seen in the healthcare industry. A team of data scientists and medical professionals collaborated to develop a predictive model for early detection of diseases. They faced hurdles in collecting diverse and high-quality data, but by leveraging automation tools for data preprocessing, they were able to streamline the process.

In another instance, a retail company encountered difficulties in feature selection due to the vast amount of variables available. By engaging domain experts from different departments like marketing and sales, they gained valuable insights that helped refine their features effectively. This cross-functional collaboration proved instrumental in enhancing the model’s performance.

Furthermore, an e-commerce platform successfully tackled deployment issues by implementing continuous testing and validation procedures post-launch. This proactive approach ensured that any discrepancies or errors were swiftly addressed before impacting user experience or business operations.

Conclusion

In building a machine learning pipeline, challenges are inevitable. However, with the right strategies in place, these obstacles can be overcome to create efficient and reliable models.

By focusing on data collection and preparation, feature selection and engineering, model selection and tuning, and deployment and monitoring, teams can address the key areas of concern throughout pipeline development. Automation tools streamline repetitive tasks, while collaborating with domain experts ensures that the model aligns with business objectives. Following best practices and standards maintains consistency and accuracy, and continuous testing and validation confirm the model’s performance over time.